Xi Li

SPIRIT: Adapting Vision Foundation Models for Unified Single- and Multi-Frame Infrared Small Target Detection

Feb 02, 2026

Abstract:Infrared small target detection (IRSTD) is crucial for surveillance and early-warning, with deployments spanning both single-frame analysis and video-mode tracking. A practical solution should leverage vision foundation models (VFMs) to mitigate infrared data scarcity, while adopting a memory-attention-based temporal propagation framework that unifies single- and multi-frame inference. However, infrared small targets exhibit weak radiometric signals and limited semantic cues, which differ markedly from visible-spectrum imagery. This modality gap makes direct use of semantics-oriented VFMs and appearance-driven cross-frame association unreliable for IRSTD: hierarchical feature aggregation can submerge localized target peaks, and appearance-only memory attention becomes ambiguous, leading to spurious clutter associations. To address these challenges, we propose SPIRIT, a unified and VFM-compatible framework that adapts VFMs to IRSTD via lightweight physics-informed plug-ins. Spatially, PIFR refines features by approximating rank-sparsity decomposition to suppress structured background components and enhance sparse target-like signals. Temporally, PGMA injects history-derived soft spatial priors into memory cross-attention to constrain cross-frame association, enabling robust video detection while naturally reverting to single-frame inference when temporal context is absent. Experiments on multiple IRSTD benchmarks show consistent gains over VFM-based baselines and SOTA performance.

Online unsupervised Hebbian learning in deep photonic neuromorphic networks

Jan 29, 2026

Abstract:While software implementations of neural networks have driven significant advances in computation, the von Neumann architecture imposes fundamental limitations on speed and energy efficiency. Neuromorphic networks, with structures inspired by the brain's architecture, offer a compelling solution with the potential to approach the extreme energy efficiency of neurobiological systems. Photonic neuromorphic networks (PNNs) are particularly attractive because they leverage the inherent advantages of light, namely high parallelism, low latency, and exceptional energy efficiency. Previous PNN demonstrations have largely focused on device-level functionalities or system-level implementations reliant on supervised learning and inefficient optical-electrical-optical (OEO) conversions. Here, we introduce a purely photonic deep PNN architecture that enables online, unsupervised learning. We propose a local feedback mechanism operating entirely in the optical domain that implements a Hebbian learning rule using non-volatile phase-change material synapses. We experimentally demonstrate this approach on a non-trivial letter recognition task using a commercially available fiber-optic platform and achieve a 100 percent recognition rate, showcasing an all-optical solution for efficient, real-time information processing. This work unlocks the potential of photonic computing for complex artificial intelligence applications by enabling direct, high-throughput processing of optical information without intermediate OEO signal conversions.
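
For readers unfamiliar with Hebbian learning, the following is a minimal numerical sketch of a generic Hebbian weight update for a single linear neuron. It is not the optical implementation described in the abstract: the function name `hebbian_step`, the learning rate `eta`, the toy input `pattern`, and the weight renormalization step are all assumptions made for illustration.

```python
import numpy as np

def hebbian_step(w, x, eta=0.1):
    """One generic Hebbian update for a linear neuron: dw = eta * y * x."""
    y = float(w @ x)                      # post-synaptic activity
    w_new = w + eta * y * x               # strengthen weights where input and output co-activate
    return w_new / np.linalg.norm(w_new)  # renormalize (assumption) so the weights stay bounded

# Toy demonstration: repeatedly presenting one pattern aligns the weights with it.
rng = np.random.default_rng(0)
pattern = np.array([1.0, 0.0, 1.0, 0.0])  # hypothetical input pattern
w = rng.random(4) + 0.1                   # positive initial weights, so w @ pattern > 0
w /= np.linalg.norm(w)
for _ in range(200):
    w = hebbian_step(w, pattern)
```

No labels are used anywhere in the update, which is what makes the rule unsupervised; the renormalization is one common way to prevent the unbounded weight growth of the plain rule.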

REL-SF4PASS: Panoramic Semantic Segmentation with REL Depth Representation and Spherical Fusion

Jan 23, 2026

Abstract:As an important and challenging problem in computer vision, Panoramic Semantic Segmentation (PASS) aims to give complete scene perception based on an ultra-wide angle of view. Most PASS methods focus on spherical geometry with RGB input or use depth information in its original or HHA format, which does not make full use of panoramic image geometry. To address these shortcomings, we propose REL-SF4PASS with our REL depth representation based on cylindrical coordinates and Spherical-dynamic Multi-Modal Fusion (SMMF). REL is made up of Rectified Depth, Elevation-Gained Vertical Inclination Angle, and Lateral Orientation Angle, which fully represents 3D space in cylindrical coordinate style and the surface normal direction. SMMF aims to ensure the diversity of fusion for different panoramic image regions and reduce the breakage of cylinder side surface expansion in ERP projection; it uses different fusion strategies to match the different regions in panoramic images. Experimental results show that REL-SF4PASS considerably improves performance and robustness on the popular Stanford2D3D Panoramic benchmark. It gains 2.35% average mIoU improvement on all 3 folds and reduces the performance variance by approximately 70% when facing 3D disturbance.

MapViT: A Two-Stage ViT-Based Framework for Real-Time Radio Quality Map Prediction in Dynamic Environments

Jan 22, 2026

Abstract:Recent advancements in mobile and wireless networks are unlocking the full potential of robotic autonomy, enabling robots to take advantage of ultra-low latency, high data throughput, and ubiquitous connectivity. However, for robots to navigate and operate seamlessly, efficiently and reliably, they must have an accurate understanding of both their surrounding environment and the quality of radio signals. Achieving this in highly dynamic and ever-changing environments remains a challenging and largely unsolved problem. In this paper, we introduce MapViT, a two-stage Vision Transformer (ViT)-based framework inspired by the success of the pre-train and fine-tune paradigm for Large Language Models (LLMs). MapViT is designed to predict both environmental changes and expected radio signal quality. We evaluate the framework using a set of representative Machine Learning (ML) models, analyzing their respective strengths and limitations across different scenarios. Experimental results demonstrate that the proposed two-stage pipeline enables real-time prediction, with the ViT-based implementation achieving a strong balance between accuracy and computational efficiency. This makes MapViT a promising solution for energy- and resource-constrained platforms such as mobile robots. Moreover, the geometry foundation model derived from the self-supervised pre-training stage improves data efficiency and transferability, enabling effective downstream predictions even with limited labeled data. Overall, this work lays the foundation for next-generation digital twin ecosystems, and it paves the way for a new class of ML foundation models driving multi-modal intelligence in future 6G-enabled systems.

Air-Chamber Based Soft Six-Axis Force/Torque Sensor for Human-Robot Interaction

Nov 17, 2025

Abstract:Soft multi-axis force/torque sensors provide safe and precise force interaction. Capturing all degrees of freedom of force is imperative for accurate force measurement with six-axis force/torque sensors. However, cross-axis coupling can lead to calibration issues and decreased accuracy. As a result, developing a soft and accurate six-axis sensor is a challenging task. In this paper, a soft air-chamber type six-axis force/torque sensor with 16-channel barometers is introduced; the barometers are housed in hyper-elastic air chambers made of silicone rubber. Additionally, an effective decoupling method is proposed, based on a rigid-soft hierarchical structure, which reduces the six-axis decoupling problem to two three-axis decoupling problems. Finite element model simulation and experiments demonstrate the compatibility of the proposed approach with reality. The prototype's sensing performance is quantitatively measured in terms of static load response, dynamic load response and dynamic response characteristics. It possesses a measuring range of 50 N force and 1 Nm torque, and the average deviation, repeatability, non-linearity and hysteresis are 4.9%, 2.7%, 5.8% and 6.7%, respectively. The results indicate that the prototype exhibits satisfactory sensing performance while maintaining its softness due to the presence of soft air chambers.

Innovative Design of Multi-functional Supernumerary Robotic Limbs with Ellipsoid Workspace Optimization

Nov 15, 2025

Abstract:Supernumerary robotic limbs (SRLs) offer substantial potential in both the rehabilitation of hemiplegic patients and the enhancement of functional capabilities for healthy individuals. Designing a general-purpose SRL device is inherently challenging, particularly when developing a unified theoretical framework that meets the diverse functional requirements of both upper and lower limbs. In this paper, we propose a multi-objective optimization (MOO) design theory that integrates grasping workspace similarity, walking workspace similarity, braced force for sit-to-stand (STS) movements, and overall mass and inertia. A geometric vector quantification method is developed using an ellipsoid to represent the workspace, aiming to reduce computational complexity and address quantification challenges. The ellipsoid envelope transforms workspace points into ellipsoid attributes, providing a parametric description of the workspace. Furthermore, the STS static braced force assesses the effectiveness of force transmission, and the overall mass and inertia objective restricts excessive link length. To facilitate rapid and stable convergence of the model to high-dimensional irregular Pareto fronts, we introduce a multi-subpopulation correction firefly algorithm. This algorithm incorporates a strategy involving attractive and repulsive domains to effectively handle the MOO task. The optimized solution is used to redesign the prototype for experimentation to meet specified requirements. Six healthy participants and two hemiplegic patients participated in real experiments. Compared to the pre-optimization results, the average grasp success rate improved by 7.2%, while the muscle activity during walking and STS tasks decreased by an average of 12.7% and 25.1%, respectively. The proposed design theory offers an efficient option for the design of multi-functional SRL mechanisms.
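
To make the idea of turning workspace points into ellipsoid attributes concrete, here is a small sketch that fits a PCA-aligned enclosing ellipsoid to a point cloud. The abstract does not specify how the authors construct their envelope, so this is an assumed stand-in and not their method: the function name `ellipsoid_envelope`, the PCA alignment, and the synthetic `workspace` points are all hypothetical, and the result is not a minimum-volume ellipsoid.

```python
import numpy as np

def ellipsoid_envelope(points):
    """Return (center, semi_axes, directions) of a PCA-aligned ellipsoid enclosing the points."""
    pts = np.asarray(points, dtype=float)
    center = pts.mean(axis=0)
    cov = np.cov((pts - center).T)            # 3x3 covariance of the point cloud
    eigvals, eigvecs = np.linalg.eigh(cov)    # columns of eigvecs are principal directions
    local = (pts - center) @ eigvecs          # point coordinates in the principal frame
    std = np.sqrt(eigvals)                    # per-axis spread (ascending order)
    # Inflate the one-sigma ellipsoid until the farthest point lies on its surface.
    scale = np.sqrt(((local / std) ** 2).sum(axis=1)).max()
    return center, scale * std, eigvecs

# Synthetic stand-in for sampled workspace points (hypothetical data).
rng = np.random.default_rng(1)
workspace = rng.uniform(-1.0, 1.0, size=(500, 3)) * np.array([3.0, 2.0, 1.0])
center, semi_axes, directions = ellipsoid_envelope(workspace)

# Every point satisfies the ellipsoid inequality sum((u_i / a_i)^2) <= 1.
local = (workspace - center) @ directions
inside = ((local / semi_axes) ** 2).sum(axis=1)
```

The nine numbers returned (three for the center, three semi-axis lengths, and an orientation) give a compact parametric description that can be compared between workspaces far more cheaply than the raw point sets.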

DiscoX: Benchmarking Discourse-Level Translation task in Expert Domains

Nov 14, 2025

Abstract:The evaluation of discourse-level translation in expert domains remains inadequate, despite its centrality to knowledge dissemination and cross-lingual scholarly communication. While these translations demand discourse-level coherence and strict terminological precision, current evaluation methods predominantly focus on segment-level accuracy and fluency. To address this limitation, we introduce DiscoX, a new benchmark for discourse-level and expert-level Chinese-English translation. It comprises 200 professionally-curated texts from 7 domains, with an average length exceeding 1700 tokens. To evaluate performance on DiscoX, we also develop Metric-S, a reference-free system that provides fine-grained automatic assessments across accuracy, fluency, and appropriateness. Metric-S demonstrates strong consistency with human judgments, significantly outperforming existing metrics. Our experiments reveal a remarkable performance gap: even the most advanced LLMs still trail human experts on these tasks. This finding validates the difficulty of DiscoX and underscores the challenges that remain in achieving professional-grade machine translation. The proposed benchmark and evaluation system provide a robust framework for more rigorous evaluation, facilitating future advancements in LLM-based translation.

End to End AI System for Surgical Gesture Sequence Recognition and Clinical Outcome Prediction

Nov 14, 2025

Abstract:Fine-grained analysis of intraoperative behavior and its impact on patient outcomes remains a longstanding challenge. We present Frame-to-Outcome (F2O), an end-to-end system that translates tissue dissection videos into gesture sequences and uncovers patterns associated with postoperative outcomes. Leveraging transformer-based spatial and temporal modeling and frame-wise classification, F2O robustly detects consecutive short (~2 seconds) gestures in the nerve-sparing step of robot-assisted radical prostatectomy (AUC: 0.80 frame-level; 0.81 video-level). F2O-derived features (gesture frequency, duration, and transitions) predicted postoperative outcomes with accuracy comparable to human annotations (0.79 vs. 0.75; overlapping 95% CI). Across 25 shared features, effect size directions were concordant, with small differences (~0.07) and strong correlation (r = 0.96, p < 1e-14). F2O also captured key patterns linked to erectile function recovery, including prolonged tissue peeling and reduced energy use. By enabling automatic interpretable assessment, F2O establishes a foundation for data-driven surgical feedback and prospective clinical decision support.

MultiCrafter: High-Fidelity Multi-Subject Generation via Spatially Disentangled Attention and Identity-Aware Reinforcement Learning

Sep 26, 2025

Abstract:Multi-subject image generation aims to synthesize user-provided subjects in a single image while preserving subject fidelity, ensuring prompt consistency, and aligning with human aesthetic preferences. However, existing methods, particularly those built on the In-Context-Learning paradigm, are limited by their reliance on simple reconstruction-based objectives, which leads both to severe attribute leakage that compromises subject fidelity and to a failure to align with nuanced human preferences. To address this, we propose MultiCrafter, a framework that ensures high-fidelity, preference-aligned generation. First, we find that the root cause of attribute leakage is a significant entanglement of attention between different subjects during the generation process. Therefore, we introduce explicit positional supervision to separate attention regions for each subject, effectively mitigating attribute leakage. To enable the model to accurately plan the attention region of different subjects in diverse scenarios, we employ a Mixture-of-Experts architecture to enhance the model's capacity, allowing different experts to focus on different scenarios. Finally, we design a novel online reinforcement learning framework to align the model with human preferences, featuring a scoring mechanism to accurately assess multi-subject fidelity and a more stable training strategy tailored for the MoE architecture. Experiments validate that our framework significantly improves subject fidelity while better aligning with human preferences.

IGFuse: Interactive 3D Gaussian Scene Reconstruction via Multi-Scans Fusion

Aug 18, 2025

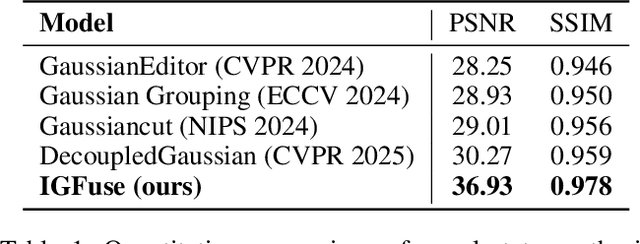

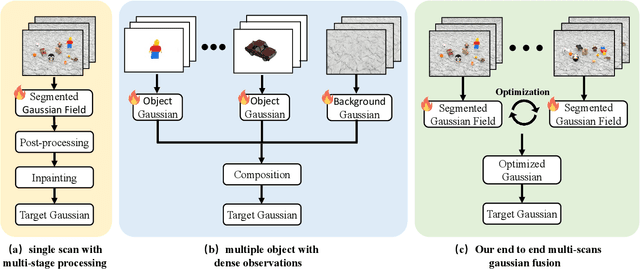

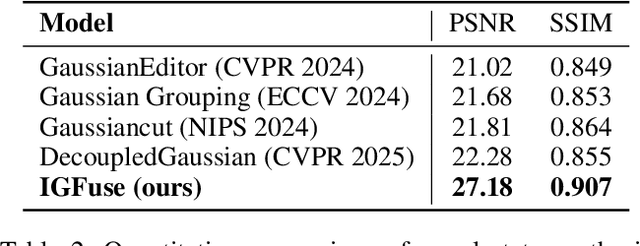

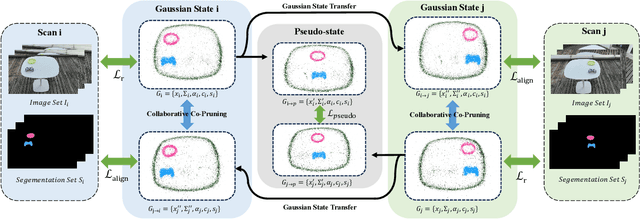

Abstract:Reconstructing complete and interactive 3D scenes remains a fundamental challenge in computer vision and robotics, particularly due to persistent object occlusions and limited sensor coverage. Multiview observations from a single scene scan often fail to capture the full structural details. Existing approaches typically rely on multi-stage pipelines (such as segmentation, background completion, and inpainting) or require per-object dense scanning, both of which are error-prone and not easily scalable. We propose IGFuse, a novel framework that reconstructs an interactive Gaussian scene by fusing observations from multiple scans, where natural object rearrangement between captures reveals previously occluded regions. Our method constructs segmentation-aware Gaussian fields and enforces bi-directional photometric and semantic consistency across scans. To handle spatial misalignments, we introduce a pseudo-intermediate scene state for unified alignment, alongside collaborative co-pruning strategies to refine geometry. IGFuse enables high-fidelity rendering and object-level scene manipulation without dense observations or complex pipelines. Extensive experiments validate the framework's strong generalization to novel scene configurations, demonstrating its effectiveness for real-world 3D reconstruction and real-to-simulation transfer. Our project page is available online.
